Long-tail learning refers to training a model on a dataset with a long-tail distribution so that the model generalizes well across all categories. Current long-tail learning methods fall mainly into class rebalancing, data augmentation, decoupled learning, and ensemble learning, among others. However, few studies have examined the problem from the perspective of optimization conflicts in long-tail representation learning, and as a result the representations learned by these methods are still dominated by certain categories.

In this research, the authors first observe, through extensive experiments, the gradient conflicts and corresponding imbalances that arise during optimization in mainstream long-tail learning methods. Multi-objective optimization is considered an ideal way to address this issue.
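To make the notion of a gradient conflict concrete, the following is a minimal sketch and not the authors' implementation. It assumes two hypothetical gradient vectors, `g_head` and `g_tail`, standing in for the gradients of a head-class loss and a tail-class loss with respect to shared parameters. A negative cosine similarity signals a conflict, and the closed-form two-objective min-norm combination (the kind of step used in MGDA-style multi-objective optimization) yields an update direction that does not oppose either gradient.

```python
import numpy as np

def cosine_similarity(g1, g2):
    """Cosine of the angle between two gradient vectors."""
    return float(g1 @ g2 / (np.linalg.norm(g1) * np.linalg.norm(g2)))

def min_norm_direction(g1, g2):
    """Two-objective min-norm combination.

    Finds a in [0, 1] that minimizes ||a*g1 + (1-a)*g2||^2
    and returns (a, combined direction).
    """
    diff = g1 - g2
    denom = float(diff @ diff)
    if denom == 0.0:
        # Identical gradients: any weighting gives the same direction.
        return 0.5, g1.copy()
    a = float((g2 - g1) @ g2 / denom)
    a = min(1.0, max(0.0, a))  # clip to the valid weight range
    return a, a * g1 + (1.0 - a) * g2

# Hypothetical gradients (illustrative values, not taken from the study).
g_head = np.array([1.0, 0.0])
g_tail = np.array([-0.5, 1.0])

conflict = cosine_similarity(g_head, g_tail) < 0  # True means the gradients conflict
weight, direction = min_norm_direction(g_head, g_tail)
```

With these illustrative values the two gradients conflict, yet the combined `direction` has a positive inner product with both, so a small step along its negative decreases both losses to first order.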

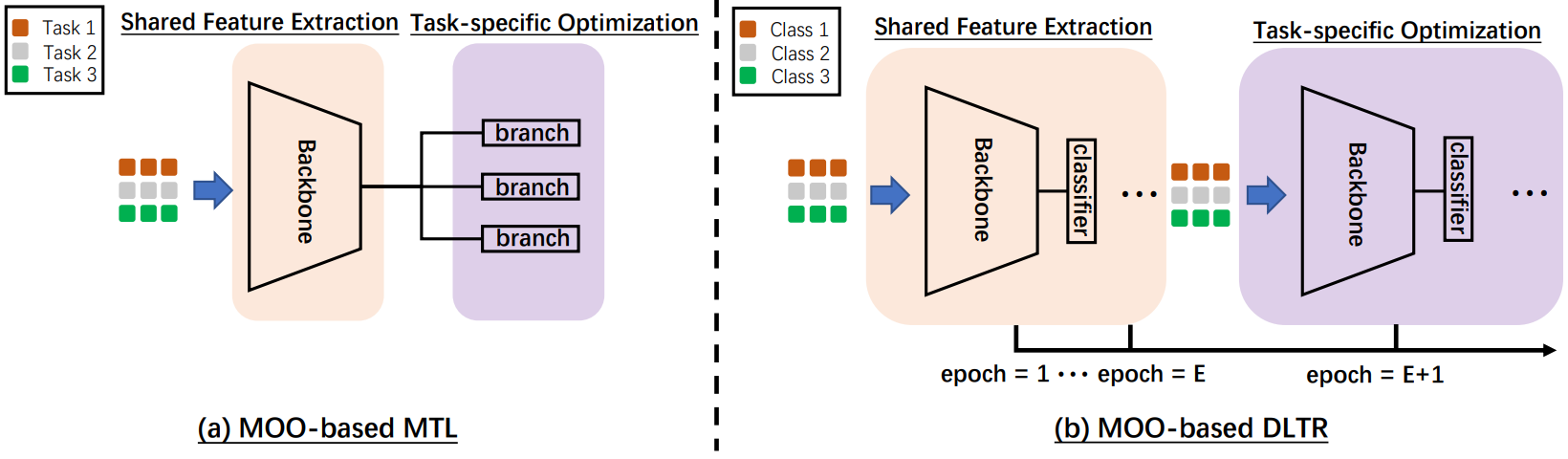

Fig. 1: Illustration of PLOT

Furthermore, this study integrates various long-tail learning methods with multi-objective optimization techniques, demonstrating the significant potential of multi-objective optimization for solving this problem. The research also provides generalization and convergence guarantees for multi-objective optimization under the long-tail setting. Experimental results on multiple mainstream public long-tail learning datasets indicate that the proposed method is plug-and-play and broadly applicable.

Zhipeng Zhou is a doctoral candidate in the School of Computer Science and Technology, University of Science and Technology of China. The corresponding author, Wei Gong, is a professor in the same school. This research was supported by the National Natural Science Foundation of China (NSFC) under projects No. 61932017 and No. 61971390.

Link: https://openreview.net/forum?id=b66P1u0k15